What is A/B Testing?

by Ted Vrountas

Increasingly, today’s marketers are boosting conversion rates using a technique that experts say is one of the easiest and most effective for optimization: A/B testing.

This comprehensive guide will teach you everything you need to know to begin improving your bottom line with A/B testing — including some of the biggest mistakes even regular practitioners make.

(Keep in mind, while we do our best to explain things simply to our readers without jargon, we're not perfect. Sometimes industry lingo sneaks its way into our content. If you see any term you don't understand, you can find a simple definition of it in the Instapage marketing dictionary.)

What is A/B testing?

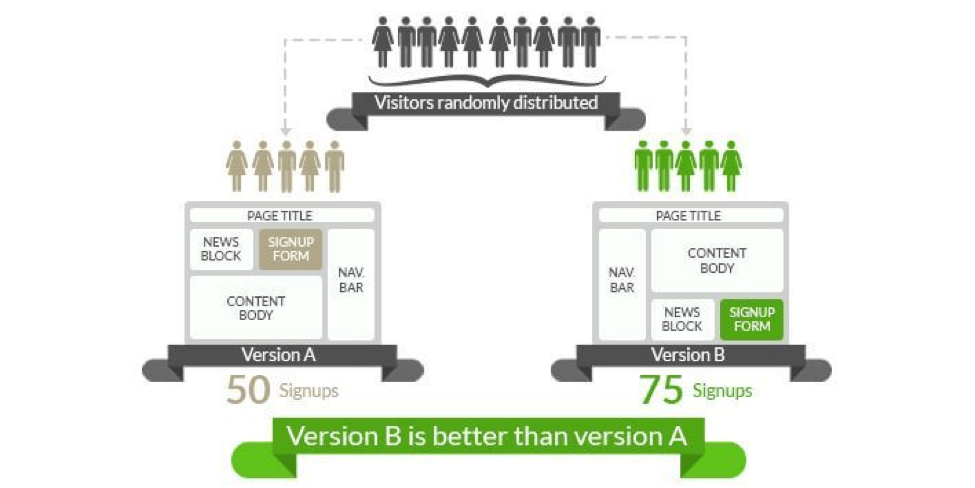

A/B testing is a method of gathering insight to aid in optimization. It involves testing an original design (A) against an alternate version of that design (B) to see which performs better. That original design is also known as “the control” and the alternate version is known as a “variation.”

This guide will discuss A/B testing as it relates to post-click landing page optimization, but you can use the method to compare and improve other web pages, emails, ads, and more.

What can I test?

It’s only natural to wonder which elements on a post-click landing page you can test. While you shouldn’t simply pick from this list at random (you’ll see why later), it should give you an idea of testable elements that can make a difference in your conversion rate.

Consider testing:

Just because you can test it, though, doesn’t mean you should. More on that in chapter 5.

Think you’re ready to start A/B testing? Let’s find out…

A/B testing has the potential to boost anyone’s bottom line, but only when the time is right. Is it right for you? Some questions to ask yourself:

Will A/B testing bring the biggest improvements to my current campaign?

While A/B testing is a valuable aid in optimization, it’s not the only method of optimization. Are there other quick fixes you could make to your campaign before you dedicate time and resources to the A/B testing process?

Have you removed navigation from all your post-click landing pages? Have you figured out your best sources of traffic?

Consider this example from Derek Halpern:

“If I get 100 people to my site, and I have a 20% conversion rate, that means I get 20 people to convert... I can try to get that conversion rate to 35% and get 35 people to convert, or, I could just figure out how to get 1,000 new visitors, maintain that 20% conversion, and you’ll see that 20% of 1,000 (200), is much higher than 35% of 100 (35).”
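Halpern's arithmetic is easy to verify. The short Python sketch below multiplies visitors by conversion rate for both scenarios in his quote. The `conversions` helper is our own illustration, not part of any tool.

```python
def conversions(visitors: int, conversion_rate: float) -> int:
    """Expected number of conversions for a given traffic level and rate."""
    # round() absorbs tiny floating-point error (e.g. 100 * 0.35).
    return round(visitors * conversion_rate)

# Scenario 1: keep traffic at 100 visitors and raise the rate from 20% to 35%.
optimize_rate = conversions(100, 0.35)

# Scenario 2: keep the 20% rate and grow traffic to 1,000 visitors.
grow_traffic = conversions(1_000, 0.20)

print(f"Raise conversion rate: {optimize_rate} conversions")
print(f"Grow traffic:          {grow_traffic} conversions")
```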

Sometimes A/B testing isn’t the fastest route to a bottom-line boost.

What are my expectations?

Too many people start A/B testing for the wrong reasons. They see that their competitor used the method to boost signups by 1,000%, or read a case study on a business that generated an extra $100,000 in monthly sales with A/B testing. So, they expect to get a big win from a similar experiment.

But big wins aren't common. If they seem like they are, it's because nobody publishes blog posts about the dozens of failed tests it took to get those big wins. Martin Goodson, research lead at Qubit, writes:

Marketers have begun to question the value of A/B testing, asking: ‘Where is my 20% uplift? Why doesn’t it ever seem to appear in the bottom line?’ Their A/B test reports an uplift of 20% and yet this increase never seems to translate into increased profits. So what’s going on?

The reason, he says, is that 80% of A/B tests are illusory. Most of those big 20%, 30%, and 50% lifts don't exist.

The stat underlines the importance of proper testing method. Just one error can produce a false positive or negative result.

Don't A/B test if you're expecting a conversion rate lift of 50% or even 20%. Sustainable lifts are usually much smaller.

Have I done my homework?

Post-click landing page best practices already exist. Could you test a post-click landing page that features blocks of text against one with skimmable copy? You could, but plenty of studies have shown that people prefer skimming to reading in full. Blocks of text have the potential to scare your visitors away.

Could you test a version of your post-click landing page with navigation against one without? You could, but time and again navigation has been shown to decrease conversion rates. HubSpot tested this a while ago, pitting a page that had navigation against one that didn't:

Here were the results:

There’s no use in testing things that have already been shown to work. Read up on how best to build a high-converting post-click landing page before you start running any experiments. If you haven’t already built an anatomically correct post-click landing page, you’re not ready to start A/B testing.

Why am I A/B testing?

Obviously you’re testing to boost your business’s bottom line, but for reasons you’ll discover later, you should have a reason to conduct each one of your tests. And that reason should be rooted in data.

Instead of picking a random element to test, find the weak links in your marketing funnel and choose the type of test that has the potential to fix them. For Jacob Baadsgaard's clients, the weakest link is usually traffic:

“I discovered that — on average — all of the conversions in an AdWords account come from just 9% of the account’s keywords.

Yes, you read that right — all of the conversions.

To put it simply, for every 10 keywords you bid on, 9 of them produce nothing! Absolutely nothing! And here’s the kicker — that useless 91% of your keywords eats up 61% of your ad spend.”
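One way to start looking for a weak link like the one Baadsgaard describes is to export keyword-level spend and conversions and measure how much budget goes to keywords that never convert. The Python sketch below assumes you already have that export; the `Keyword` record, the `weak_links` function, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Keyword:
    text: str
    spend: float       # ad spend, in your account's currency
    conversions: int

def weak_links(keywords: list[Keyword]) -> tuple[float, float]:
    """Return (share of keywords with zero conversions, share of spend they consume)."""
    total_spend = sum(k.spend for k in keywords)
    wasted = [k for k in keywords if k.conversions == 0]
    share_of_keywords = len(wasted) / len(keywords)
    share_of_spend = sum(k.spend for k in wasted) / total_spend if total_spend else 0.0
    return share_of_keywords, share_of_spend

# Hypothetical account data, for illustration only.
account = [
    Keyword("landing page builder", 400.0, 12),
    Keyword("free landing page", 250.0, 0),
    Keyword("what is a landing page", 150.0, 0),
    Keyword("landing page templates", 200.0, 0),
]
kw_share, spend_share = weak_links(account)
print(f"{kw_share:.0%} of keywords convert nothing and use {spend_share:.0%} of spend")
```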

More on figuring out what to test in chapter 3.

Am I willing to dedicate the time and resources it takes to A/B test properly?

A good A/B test, when conducted correctly, should last several weeks at the very least. Without a substantial and steady flow of traffic, just one test can take months to conclude.

Are you ready to dedicate that kind of time? Are you ready to dig into your data, find out why people aren’t converting, and brainstorm ways to fix it?

Are you willing to watch your test carefully as weeks go by, making sure that outside threats to validity don’t poison your results?

If the answer is "yes," read on to learn exactly how you should begin preparing for your test.

There’s much more to A/B testing than creating a variation of your original page and driving traffic to it. It involves careful planning, patience, and a little bit of statistics.

Let’s break it down step-by-step.

Step 1: Set conversion goals

Before you start testing just anything, it’s important to dive deep into your data. Why do you want to A/B test?

The answer to that question will come after you figure out how visitors are interacting with your page.

Does Google Analytics show they’re abandoning it immediately? You might want to try testing better message match.

Has heat mapping software indicated they’re not noticing your call-to-action button? You might want to test a bigger size, different location, or more attention-grabbing button color.

The following are just a few examples of tools you can use to gain better customer insight for your A/B tests:

With an idea of how your visitors behave on your page, you can then formulate a pre-test hypothesis.

Step 2: Develop a hypothesis

Now it’s time to ask yourself, “What am I trying to improve with this A/B test?”

Let's use the example above since it's conceptually easy for beginners to grasp. If you're changing your button color because visitors aren't noticing it, your hypothesis would look something like this:

“After viewing numerous user heat maps, I discovered that visitors aren’t noticing the call-to-action button. Therefore, I believe adjusting the color will draw more attention to it.”

Without a clear hypothesis, your test has no clear goal. Your hypothesis should spell out what you're changing, how you're changing it, and why you're making that adjustment.

To a beginner, "what is my goal?" seems so straightforward that it's not worth asking. Most assume that the ultimate goal of any A/B test is to boost conversions, but that's not always the case. Take this test from Server Density, for example.

Instead of boosting conversions, their goal was to increase revenue. So, they A/B tested a new packaged pricing structure against their old one.

Here’s the old pricing structure, based on the number of servers a customer needed monitored by the company:

Here’s the variation — packaged pricing that started at $99 a month.

After testing one structure against the other, they found the original structure produced more conversions, but that the variation generated more revenue. Here’s the data to prove it:

While conversion rate is often the most important metric to track in an A/B test, it isn't always. Keep that in mind when you're determining your hypothesis and goal.
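Server Density's result shows why the metric you choose matters. This Python sketch compares two page versions on both conversion rate and revenue per visitor. The `summarize` function and all figures are invented for illustration; they are not Server Density's data.

```python
def summarize(visitors: int, conversions: int, revenue: float) -> dict[str, float]:
    """Compute conversion rate and revenue per visitor for one page version."""
    return {
        "conversion_rate": conversions / visitors,
        "revenue_per_visitor": revenue / visitors,
    }

# Hypothetical numbers: the control converts more visitors, the variation earns more.
control = summarize(visitors=2_000, conversions=100, revenue=5_000.0)
variation = summarize(visitors=2_000, conversions=80, revenue=7_920.0)

for name, stats in (("control", control), ("variation", variation)):
    print(f"{name}: {stats['conversion_rate']:.1%} conversion rate, "
          f"${stats['revenue_per_visitor']:.2f} revenue per visitor")
```

Judged on conversion rate alone, the control wins; judged on revenue per visitor, the variation does. Deciding which number is your goal before the test starts keeps you from picking whichever metric happens to look better afterward.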

Step 3: Make your adjustments

After you’ve determined what you’re changing and why you’re changing it, it’s time to build your variation.

If it’s the layout of your original page (aka the “control” page) you’re adjusting, adjust it in your variation page. If it’s the effect of a new button color that you want to see, alter the color.

If you’re using a professional WYSIWYG (what you see is what you get) editor, you’ll be able to click to edit elements on your page without IT staff. If you’re not, you’ll need the help of a web designer with coding experience to set up your test.

Different types of A/B tests

Some marketers claim that to run a "true" A/B test, you can only test one element at a time, be it a headline, a button color, or a featured image. That's not entirely correct, though.

Testing one element at a time

Many conversion rate optimizers prefer to test only one element at a time for reasons of accuracy and simplicity, since testing multiple elements at once can be difficult. When your variation differs from your control in only one way, the reason one outperforms the other is clear.

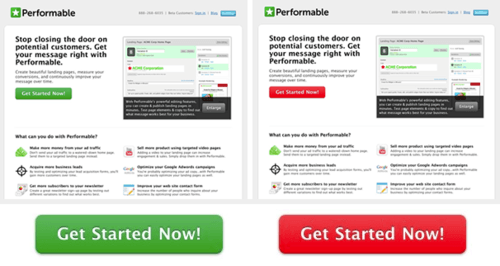

For example, in the hypothetical test above, if your control page and your variation page are identical except for a different colored button, then you know at the end of the test why one page beat the other. The reason was button color, like in this test from HubSpot:

Both pages are identical except for button color. The one with the red button converted better at the end of the test, so the red button is likely the reason for the increase.

If you have the time, resources, and vast amount of traffic needed to conduct tests like this one, then go for it. If you’re like most businesses, though, you’ll want to alter more than one element per test.

Testing multiple elements at once

Unfortunately, it can take months to conclude just one A/B test. That being the case, many smaller businesses without thousands of visitors readily available will choose to change more than one page element at a time, then test the pages against each other.

Contrary to popular belief, this is not a multivariate test, because you’re still testing your control page (A) vs. your variation page (B). The difference between this method and multivariate testing is that once you do declare a winning page, you won’t know why it won. Some call it a “variable cluster” test. This one, conducted by the MarketingExperiments team, tested a control page against a variation with a different headline, more copy, and social proof.

Original:

Variation:

The result was an 89% boost in conversions. However, because many elements were changed in this test from the original, it’s impossible for the MarketingExperiments team to know which new elements were most responsible for the change.

A multivariate test, on the other hand, can tell you how different elements interact with each other when grouped into one test. But it does require some extra steps that make the method a little more complicated than A/B testing. It also requires a lot more traffic.
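To see why a multivariate test needs so much more traffic, consider a full-factorial setup, in which every combination of element versions becomes its own page. The Python sketch below counts those combinations and divides traffic among them; the elements, versions, and the 12,000 monthly visitors are hypothetical.

```python
from itertools import product

# Hypothetical elements and versions for a multivariate test.
elements = {
    "headline": ["original", "benefit-led"],
    "hero_image": ["product shot", "customer photo"],
    "cta_text": ["Get started", "Start my free trial", "See pricing"],
}

# A full-factorial multivariate test serves every combination of versions.
combinations = list(product(*elements.values()))

# In a simple A/B test, the same traffic is split between just two pages.
monthly_visitors = 12_000
print(f"A/B test: {monthly_visitors // 2} visitors per page")
print(f"Multivariate test: {len(combinations)} combinations, "
      f"{monthly_visitors // len(combinations)} visitors per combination")
```

Three elements with a handful of versions each already produce 12 pages, so each one receives a sixth of the traffic a page in a two-way A/B test would get.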

If the idea of not knowing why one page performs better than the other bothers you, then you’ll want to test one element at a time or learn how to conduct a multivariate test.

But if you don’t have the time or resources to change just one element per test, then altering multiple elements per test may be the way to go.

Many digital marketing influencers, like Moz’s Rand Fishkin, recommend using this method to evaluate radically different page designs in order to find which is closest to the global maximum (the best possible post-click landing page design). Testing singular page elements — trying to find which button color or headline converts best, for example — can help you improve your current page. However, by simply improving your current page, you assume that you were on the right track to begin with. Maybe there’s a completely different design that would perform better. In a blog post titled “Don’t Fall Into the Trap of A/B Testing Minutiae,” Fishkin elaborates:

“Let’s say you find a page/concept you’re relatively happy with and start testing the little things – optimizing around the local minimum. You might run tests for 4-6 months, eek out a 5% improvement in your overall conversion rate and feel pretty good. Until…

You run another big, new idea in a test and improve further. Now you know you’ve been wasting your time optimizing and perfecting a page whose overall concept isn’t as good as the new, rough, unoptimized page you’ve just tested for the first time.”

As the image above shows, testing a singular element usually yields a minimal lift, if any. It’s a lot of time for a little return. On the other hand, testing a radically different design centered around a new approach can yield a big conversion boost.

Take this Moz post-click landing page for example, tested against a variation created by the team at Conversion Rate Experts:

The variation was six times longer and generated 52% more conversions! Here’s what the testers had to say about it:

“In our analysis of Rand’s effective face-to-face presentation, we noticed that he needed at least five minutes to make the case for Moz’s paid product. The existing page was more like a one-minute summary. Once we added the key elements of Rand’s presentation, the page became much longer.”

If they had simply tried to improve the existing elements on that original page with tests like button color vs. button color, or call-to-action vs. call-to-action, etc., then they would never have built a longer, more comprehensive, and ultimately higher-converting post-click landing page.

Keep that in mind when you’re designing your test.

Step 4: Calculate your sample size

Before you can conclude your test, you need to be as close as possible to certain that your results are reliable (that is, that your new page is actually performing better than the old one). And the only way to do that is by reaching statistical significance.

What is statistical significance?

Statistical significance is a measure of how confident you can be that your A/B test results aren't a fluke. At an 80% level of significance (also referred to as "confidence level"), a difference as big as the one you observed would turn up by chance alone no more than 20% of the time if your change actually had no effect.

To reach statistical significance, you’ll need an adequate sample size — aka, number of visitors to your page — before you can conclude your test. And that sample size will be based on the level of significance you want to reach.

The more certain you want to be about the results of your test, the more visitors you’ll need to generate. To be 90% sure you’ll need to generate more visitors than you would need to be 80% sure.

Keep in mind, the industry-standard level of statistical significance is 95%. But professionals often run tests until they reach 96%, 97%, or even 99%.
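Testing tools differ in how they compute significance. One common approach for comparing two conversion rates is a two-proportion z-test, sketched below in Python with only the standard library. The function name and the visitor and conversion counts are hypothetical, and your software may use a different method (a Bayesian model, for example), so treat this as an illustration of the idea rather than how any particular tool works.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z statistic, two-sided p-value). A result is significant at the
    95% level when the p-value is below 0.05.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both pages at 0% or 100%: no difference to detect
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function, then a two-sided p-value.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Hypothetical results: control 200/5,000 (4.0%), variation 260/5,000 (5.2%).
z, p = two_proportion_z_test(200, 5_000, 260, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```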

While you can never be completely certain your new variation is better than your original, you can get as close as possible by running your test for as long as time allows. Even experts have seen early results flip: a page that looked like a clear winner at a 99% level of significance ended up losing once the test ran longer. Peep Laja describes one such reversal:

“The variation I built was losing bad — by more than 89% (and no overlap in the margin of error). Some tools would already call it and say statistical significance was 100%. The software I used said Variation 1 has 0% chance to beat Control. My client was ready to call it quits. However since the sample size here was too small (only a little over 100 visits per variation) I persisted and this is what it looked like 10 days later.

The variation that had 0% chance of beating control was now winning with 95% confidence.”

The longer you run your test, the bigger your sample size. The bigger your sample size, the more confident you can be about the results. A small sample size, as Benny Blum notes with a simple example, can deliver misleading data:

Consider the null hypothesis: dogs are bigger than cats. If I use a sample of one dog and one cat – for example, a Havanese and a Lion – I would conclude that my hypothesis is incorrect and that cats are bigger than dogs. But, if I used a larger sample size with a wide variety of cats and dogs, the distribution of sizes would normalize, and I’d conclude that, on average, dogs are bigger than cats.

You can determine your sample size manually with some heavy math and a knowledge of statistics, or you can quickly use a calculator like this one from Optimizely.

Either way, to figure out how many visitors you’ll need to generate before you can conclude your test, you’ll need to know a few more things — like baseline conversion rate and minimum detectable effect.

What is your baseline conversion rate?

What’s the current conversion rate of your original page? How many visitors click your CTA button compared to how many land on your page?

To figure it out, divide the number of people who have converted on your post-click landing page by the total number of people who have visited.
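In code, the calculation is a single division. The Python sketch below adds a guard against a page with no recorded visitors; the function name and the figures are hypothetical.

```python
def baseline_conversion_rate(conversions: int, visitors: int) -> float:
    """Conversions divided by total visitors for the original (control) page."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors

# Hypothetical history: 150 conversions from 3,000 visitors.
rate = baseline_conversion_rate(150, 3_000)
print(f"Baseline conversion rate: {rate:.1%}")
```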

What is your desired minimum detectable effect?

Your minimum detectable effect is the minimum change in conversion rate you want to be able to detect.

Do you want to be able to detect when your page is converting 10% higher or lower than the original? 5%? The smaller the number, the more precise your results will be, but the bigger the sample size you'll need before you can conclude your test.

For example, if you set your minimum detectable effect to 5% before the test, and at its conclusion your testing software says that your variation page is converting 3% better than your original, you can’t be confident that’s true. You can only be confident of any increase or decrease in conversion rate that exceeds 5%.

Now you can punch in both these numbers, along with your desired level of confidence (we recommend at least 95%), and the calculator you use will generate a number of visitors you need to reach before you can conclude your test.
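If you'd like to see roughly what a calculator does under the hood, the Python sketch below uses a standard normal-approximation formula for comparing two proportions. Two assumptions go beyond this guide: the minimum detectable effect is treated as relative to the baseline (so 20% of a 5% baseline means detecting 5% vs. 6%), and statistical power, the chance of detecting a real effect of that size, is set to 80%, a common default. Online calculators such as Optimizely's may use a different statistical method and give different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variation(baseline: float, relative_mde: float,
                              confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate visitors needed per page for a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)          # smallest lift worth detecting
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Hypothetical inputs: 5% baseline, detect a 20% relative lift (5% -> 6%).
n = sample_size_per_variation(0.05, 0.20)
print(f"About {n:,} visitors per variation, {2 * n:,} in total")
```

Try halving the minimum detectable effect or raising the confidence level: the required sample grows quickly, which is the trade-off described above.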

However, reaching that number of visitors doesn’t always mean it’s safe to conclude your test. More on that next.

Step 5: Eliminate confounding variables

It’s important to remember that you’re not running your test in a lab. And because of that, there are outside factors that threaten to poison your data and ultimately the validity of your test. Failing to control for these things to the best of your ability can end in a false positive or negative test result.

Things like holidays, different traffic sources, and even phenomena like "regression to the mean" can make you see gains or losses where there really are none.

This is a step that spans the entirety of your test. Validity threats will inevitably pop up before you reach statistical significance, and you won't even notice some of them. To learn about a few of the most common ones to watch for, see chapter 4.

The difference between validity and reliability

If you’re reading along wondering, “Reliability? Validity? Aren’t those the same thing?”, allow us to clarify.

Reliability comes from reaching statistical significance. Once you’ve generated enough traffic to form a representative sample, you’ll have attained a reliable result.

In other words, if you were to run your A/B test again would you get the same result? Would your variation still beat your original? Or would that original beat the variation?

A test that reaches 95% significance or higher is said to be reliable. The outcome will likely be the same even if you run the test again.

Validity, on the other hand, has to do with the setup of your test. Does your experiment measure what it claims to measure?

In our earlier example — when you set up a test that aims to measure the impact of button color, does it actually? Or could your test results be attributed to a factor outside of button color?

Even if your variation page is beating your control at a 99% level of significance, it doesn’t mean the reason it’s winning is your button color.

Step 6: QA everything

The test before the test is one of the most crucial steps in this process. Make sure all the links in your ads drive traffic to the right place, that your post-click landing pages look the same on every browser and that they’re displaying properly on mobile devices.

Now test the back end of your page. Fill out your form fields and make sure the lead data is being passed through to your CRM. Are pixels from other technologies firing? Are the other tools you’re integrating with registering the conversion?

Forgetting to check these and realizing later that your links are dead or your information isn’t being properly sorted can completely ruin your test. Don’t let it happen to you.

Step 7: Drive traffic to begin your test

Now it’s time to begin your test by sending visitors to your page. Remember, both your pages’ traffic sources should be the same (unless you’re A/B testing traffic sources, of course). An email subscriber will behave differently on your page than someone who’s unfamiliar with your brand. Don’t drive email traffic to one and PPC traffic to another. Make sure you’re driving the same type of traffic to both your control and variation, otherwise you risk threatening your test’s validity.
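Most testing tools handle the split for you. If you're curious how one common approach works, the Python sketch below shows hash-based assignment: visitors from the same source are randomly but consistently divided between control and variation, rather than each page getting its own channel. The function and experiment name are hypothetical, not any particular tool's API.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically bucket a visitor into 'control' or 'variation'.

    Hashing the visitor ID together with the experiment name yields a stable,
    roughly uniform number in [0, 1). A returning visitor always sees the same
    page, and visitors from every traffic source are divided between both
    pages at roughly the same ratio.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 16**8
    return "control" if bucket < split else "variation"

print(assign_variant("visitor-123", "cta-color-test"))
```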

Step 8: Let your test run

Be patient. Even if you have the massive amount of traffic required to reach statistical significance in a matter of days, don’t conclude your test just yet.

Days of the week can impact your conversion rate. People visiting your post-click landing page on Monday and Tuesday can behave differently than those visiting it on Saturday and Sunday.

Let it run for at least three weeks, and test in week-long cycles. If you start your test on Monday and you reach statistical significance three weeks later on a Wednesday, continue driving traffic to your pages until the following Monday.
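The scheduling rule above can be sketched in Python with the standard `datetime` module. The function name is hypothetical; it takes the three-week minimum as a parameter and returns the first date, on the same weekday you started, when it's reasonable to stop.

```python
from datetime import date, timedelta

def earliest_end_date(start: date, significance_reached: date, min_weeks: int = 3) -> date:
    """Earliest stop date: after the minimum run, rounded up to whole weeks."""
    earliest = max(start + timedelta(weeks=min_weeks), significance_reached)
    days_run = (earliest - start).days
    # Ceiling division: round up to the next whole week so that every day of
    # the week is represented equally in the test.
    full_weeks = -(-days_run // 7)
    return start + timedelta(weeks=full_weeks)

# The example from the text: start on a Monday, reach significance on a
# Wednesday three weeks later, then keep running until the following Monday.
start = date(2024, 3, 4)          # a Monday
significant = date(2024, 3, 27)   # a Wednesday, 23 days later
end = earliest_end_date(start, significant)
print(end, end.strftime("%A"))
```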

And remember, the longer you run your test, the more reliable your results will be.

Step 9: Analyze the results

Once you’ve reached statistical significance, you’ve let your test run for at least three weeks, and you’ve reached the same day of the week you started on, it’s safe to call your experiment.

Remember, though, the longer you run it, the more accurate the results will be.

What happened? Did your variation dethrone your control? Did your original reign supreme? Did you eliminate major confounding variables, or could your results be attributed to something other than what you set out to test?

Regardless of those answers, the next step is the same.

Step 10: Keep testing

Just because you improved your conversion rate doesn’t mean you found the best post-click landing page. More tests have the potential to produce bigger gains.

If your variation lost to your control, the same thing goes. You didn’t fail, you just found a design that doesn’t resonate with your audience. Figure out why, and use that knowledge to inform your tests going forward.

Remember, just because your test reached statistical significance doesn’t mean that it measured what you thought it did. It’s reliable at that point — meaning that if you tested those two pages against each other again, chances are you’d come up with the same result.

That is, of course, unless one of the following validity threats poisoned your data. Here’s what you need to watch out for.

The Instrumentation effect

If you fall victim to the instrumentation effect, it means that somewhere along the line, the tools you used to conduct your test failed you — or, you failed them.

That’s why it’s important not to skip step 6. Have you checked and double-checked that your experiment is set up correctly? Are all your pixels firing? Is your data being passed to your CRM system?

After you’ve confirmed that everything is set up the way it should be, keep a close eye on your tools’ feedback throughout the test. If you see anything that looks out of the ordinary, check to see if other users of your software have had similar problems.
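One simple way to keep an eye on your tools is to compare the conversions your testing software records with the leads that actually reach your CRM each day. The Python sketch below flags days when the two counts drift apart. The `reconcile` function, the 5% tolerance, and the sample counts are hypothetical; you'd pull real numbers from your own tools' reports or exports.

```python
def reconcile(tool_conversions: int, crm_leads: int, tolerance: float = 0.05) -> bool:
    """Return True if the two counts agree within the given relative tolerance."""
    if tool_conversions == crm_leads:
        return True
    larger = max(tool_conversions, crm_leads)
    return abs(tool_conversions - crm_leads) / larger <= tolerance

# Hypothetical daily counts: (conversions in the testing tool, leads in the CRM).
daily_counts = {"Mon": (42, 41), "Tue": (38, 38), "Wed": (45, 12)}
for day, (tool, crm) in daily_counts.items():
    if not reconcile(tool, crm):
        print(f"{day}: testing tool shows {tool} conversions but the CRM has "
              f"{crm} leads; check your setup")
```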

Regression to the mean

Saying that your test "regressed to the mean" is just a fancy way of saying "the data evened out over time."

Imagine that you developed a new variation and your first 8 post-click landing page visitors convert on your offer, and the page’s conversion rate is an astonishing 100%. Does that mean that you’ve become the first one to create a perfect post-click landing page?

No. It means that you need to run your test for longer. When you do, you'll find that the results regress to the "mean," settling into the "average" range after a while.
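A confidence interval makes the point concrete. The Python sketch below uses the Wilson score interval, one standard way to put a plausible range around a conversion rate; it isn't something this guide or any specific tool prescribes. With 8 conversions from 8 visitors, the plausible 95% range still runs from roughly 68% to 100%, while hundreds of visitors narrow the range considerably.

```python
from math import sqrt

def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a conversion rate (z = 1.96)."""
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    center = (p + z**2 / (2 * visitors)) / denom
    half_width = z * sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2)) / denom
    # Clamp to [0, 1] to absorb floating-point error at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)

# A tiny sample with a "perfect" rate vs. a much larger sample.
for conversions, visitors in [(8, 8), (400, 800)]:
    low, high = wilson_interval(conversions, visitors)
    print(f"{conversions}/{visitors}: plausible range {low:.0%} to {high:.0%}")
```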

Keep in mind, regression to the mean can happen at any time. That's why it's important to run your test for as long as possible. Digital marketer Chase Dumont found that it occurred six months after he began testing:

At first, the original version outperformed the variable. I was surprised by this, because I thought the variable was better and more tightly written and designed.

He adds:

And, despite that big early lead in conversions to sales (as evidenced by the big blue spike up there – that’s the original version outperforming the variable), with time the variable eventually caught up and surpassed the original sales page’s numbers.

The longer your test runs, the more accurate it will be.

The novelty effect

This can be a confusing validity threat. Let’s use our button color example again to demonstrate.

Imagine you change your button color to green after 5 years of featuring a blue button on all your post-click landing pages.

When your variation goes live, there's a chance that your visitors click the new green button not because it's better, but because it's novel. It's new. They're used to seeing blue but not green, so it stands out in their mind because it's different from what it used to be.

Combat the novelty effect by targeting first-time visitors who aren't used to seeing your blue button. They're not used to seeing either color, so if they click the green button more often than the blue one, you can be more confident that the color itself is the reason.
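If your testing or analytics tool can tell first-time visitors apart from returning ones, you can also compare the two groups side by side. The Python sketch below computes conversion rate per segment and button color from a visit log; the log format, the function name, and the numbers are hypothetical. In this made-up data, the green button's lead comes almost entirely from returning visitors, which is the pattern you'd expect if novelty, not the color itself, were driving clicks.

```python
from collections import defaultdict

def conversion_by_segment(visits: list[tuple[str, str, bool]]) -> dict[tuple[str, str], float]:
    """Conversion rate per (segment, page) pair from (segment, page, converted) records."""
    totals = defaultdict(lambda: [0, 0])  # (segment, page) -> [conversions, visits]
    for segment, page, converted in visits:
        totals[(segment, page)][0] += int(converted)
        totals[(segment, page)][1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}

# Hypothetical visit log, 100 visits per segment and button color.
visits = (
    [("returning", "green", True)] * 30 + [("returning", "green", False)] * 70
    + [("returning", "blue", True)] * 20 + [("returning", "blue", False)] * 80
    + [("first-time", "green", True)] * 21 + [("first-time", "green", False)] * 79
    + [("first-time", "blue", True)] * 20 + [("first-time", "blue", False)] * 80
)
rates = conversion_by_segment(visits)
for (segment, page), rate in sorted(rates.items()):
    print(f"{segment:>10} / {page:<5}: {rate:.0%}")
```

Each segment still needs enough visitors on its own to reach significance, so splitting results this way raises the traffic you need.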

The history effect

Remember, factors completely out of your control can affect your A/B test’s validity. Those who fall victim to the history effect are people who don’t keep an eye out for real-world issues that can poison their data. These things include, but aren’t limited to:

If you’re running ads on Twitter and the social network goes down, it’ll affect the outcome of your test. If you’re testing a post-click landing page that offers a webinar on a holiday, it probably won’t generate the same number of conversions that a webinar scheduled to take place on a workday will. Keep the history effect in mind when you test your own post-click landing page.

The selection effect

Testers whose data is ruined via the selection effect have accidentally chosen a particular sample that’s not representative of their target audience.

For example, if you’re a B2B software provider and you run ads on a website that’s largely visited by B2C marketers, this will skew your test’s results.

Make sure that when you’re building your test, you target an audience that represents your target customer.

It’s not uncommon, even for regular A/B testers, to make these costly mistakes. Be warned — the following have the potential to waste your time, resources, and data.

Blindly following best practices, or not following them at all

So you saw that your competitor boosted their conversion rate with an orange button, and they declared in a case study that orange is the best button color. Well, their business, no matter how similar to yours, is not yours.

“Best practices” tested by other businesses aren’t necessarily best for your business. At the same time, you’re not trying to reinvent the wheel. Professional optimizers have run countless tests for you already, and from the results of those tests we know things like:

But things like “the best button color” and “the perfect headline”? They’re all subjective. They don’t necessarily apply to you.

Testing things unlikely to bring a lift

Google once tested 41 shades of blue to determine which was most likely to impact conversion rate. Should you?

No. If you’re like most small to medium-sized businesses, tests like that would be a waste of your resources.

You should not approach A/B testing with a “test everything” mindset. Instead, focus on adjusting things most likely to bring the biggest lifts in conversion rate. Most of the time that’s not subtle changes to colors or typeface.

Sure, you’ll read case studies on the internet about how a business generated millions in profit by changing one word on their post-click landing page, or by removing a singular form field — but results like that are incredibly rare (and they may not even be accurate).

Unless you have an abundance of time and resources at your disposal, steer clear of tests like those.

Before you start A/B testing, ensure efficiency by investing in professional software. With Instapage, you can create post-click landing pages to A/B test in minutes. Then, with the help of the industry's most advanced analytics dashboard, you can track metrics more accurately than ever before.

Choose from countless professional templates, click to edit any page element, and customize fully to your branding specifications:

With one click, create a variation to test. Then, manage each with a simple drop-down menu with four options: Duplicate, Pause, Transfer, Delete:

Once you begin your test, use the industry’s most advanced analytics dashboard to track key performance metrics, like:

View your results on easy-to-read graphs that compare conversion rate over time and unique visitors, and even adjust display rate percentages and baselines straight from the dashboard.

Create a professional post-click landing page and start A/B testing like the pros today.
